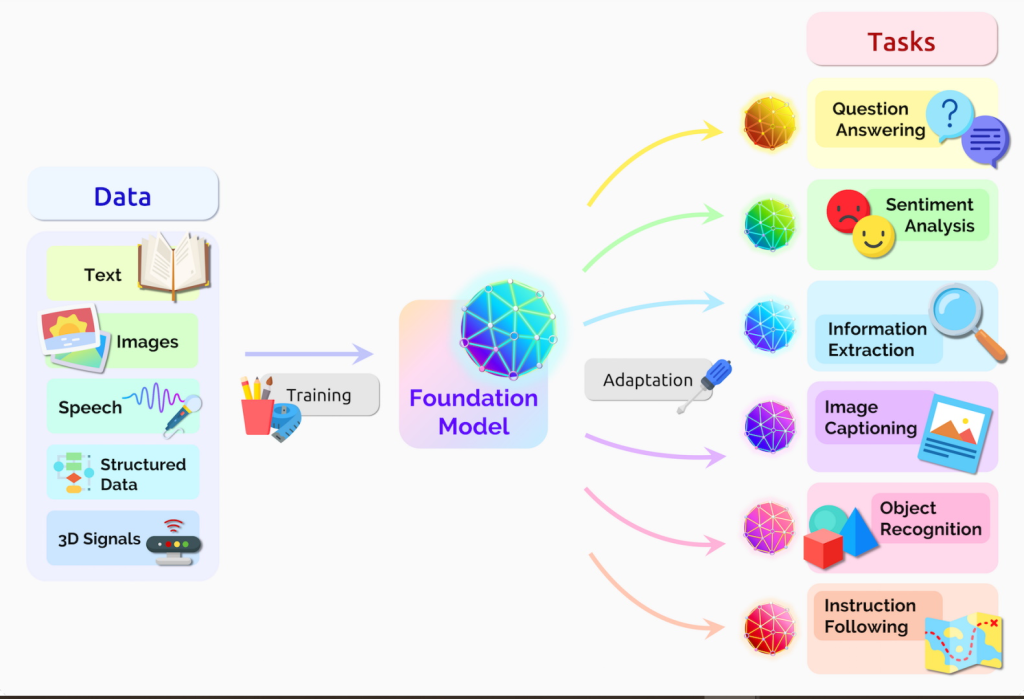

Foundation models have become the basis for a wide range of natural language processing (NLP) tasks. They are core components of current AI systems and have driven progress in language translation, sentiment analysis, question answering, and more. This article explains what foundation models are, why they matter, and why they are needed.

Understanding Foundation Models

Foundation models, in essence, are large-scale neural networks trained on massive amounts of text data. They are pre-trained on text spanning many languages, domains, and sources, which lets them capture intricate patterns and linguistic nuances. One prominent example is OpenAI’s GPT (Generative Pre-trained Transformer), which has reshaped how NLP applications are built.

The Need for Foundation Models

- Data Efficiency: Foundation models drastically improve data efficiency in NLP tasks. Pre-training a model on vast amounts of unlabeled data allows it to acquire a broad understanding of language, grammar, and context. When such a model is then fine-tuned on a specific task with a comparatively small labeled dataset, it can still perform well, which makes accurate models feasible even where labeled data is scarce.

- Generalization: Foundation models excel at generalization, enabling them to adapt to a wide array of NLP tasks. Due to their comprehensive pre-training process, they develop a robust understanding of language structures and semantics. As a result, they can effectively transfer knowledge and perform well on a multitude of downstream tasks without requiring extensive fine-tuning for each specific application.

- Multilingual Capabilities: As communication becomes more global, the demand for multilingual AI systems has grown. Because foundation models are pre-trained on corpora in many languages, they can comprehend and generate text in multiple languages, which lets developers build applications that help break down language barriers.

- Continuous Learning: Foundation models have the potential to engage in lifelong learning, staying up-to-date with evolving language patterns and societal changes. When these models continue to be trained on new data, they can refine their understanding of language, adapt to emerging terminology, and incorporate the latest trends. This keeps them relevant and useful for longer in dynamic environments.

- Democratizing AI: The advent of foundation models has paved the way for democratizing AI. By providing access to pre-trained models, developers across the globe can leverage these powerful tools without needing extensive resources or expertise in training large-scale neural networks. This accessibility drives innovation, encourages collaboration, and accelerates the development of diverse applications, ultimately benefiting society as a whole.
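
The pre-train-then-fine-tune pattern described under Data Efficiency can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the word vectors are hand-written toy values standing in for what large-scale pre-training would produce, and `encode`, `train_head`, and `predict` are hypothetical helper names, not part of any real library. The point is only that a frozen "pre-trained" representation lets a small head learn a task from a handful of labeled examples.

```python
import math

# Hypothetical "pre-trained" word vectors. In a real foundation model these
# would be learned from large unlabeled corpora; here they are toy values.
PRETRAINED_VECTORS = {
    "great": [0.9, 0.1],
    "love": [0.8, 0.2],
    "excellent": [0.95, 0.05],
    "terrible": [0.1, 0.9],
    "hate": [0.2, 0.8],
    "awful": [0.05, 0.95],
}
UNKNOWN = [0.5, 0.5]  # neutral vector for words the toy encoder does not know


def encode(text):
    """Frozen encoder: average the pre-trained vectors of the words in text."""
    words = text.lower().split()
    if not words:
        return list(UNKNOWN)
    vectors = [PRETRAINED_VECTORS.get(word, UNKNOWN) for word in words]
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(UNKNOWN))]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, epochs=500, lr=1.0):
    """Fit a small logistic-regression head; the encoder is never updated."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            features = encode(text)
            score = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(score) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def predict(text, weights, bias):
    """Return True when the head scores the text as positive."""
    features = encode(text)
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias) > 0.5


# A very small labeled dataset for the downstream task (sentiment).
LABELED = [
    ("I love it", 1),
    ("great product", 1),
    ("I hate it", 0),
    ("terrible product", 0),
]

weights, bias = train_head(LABELED)
```

With this setup, `predict("excellent", weights, bias)` should come out positive and `predict("awful", weights, bias)` negative, even though neither word appears in the labeled examples: the frozen vectors already place them near words the head has seen. A real system would fine-tune a full pre-trained network rather than a two-dimensional lookup table.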

Conclusion

Foundation models have become pivotal to artificial intelligence because they address the challenges of data efficiency, generalization, multilingual capability, continuous learning, and the democratization of AI. Their ability to comprehend and generate text across many domains and languages has advanced numerous NLP tasks. As their potential continues to unfold, foundation models are the base on which AI systems are built, shaping a future where intelligent machines communicate effectively with humans and transform a range of industries.

To learn more: https://www.leewayhertz.com/foundation-models/