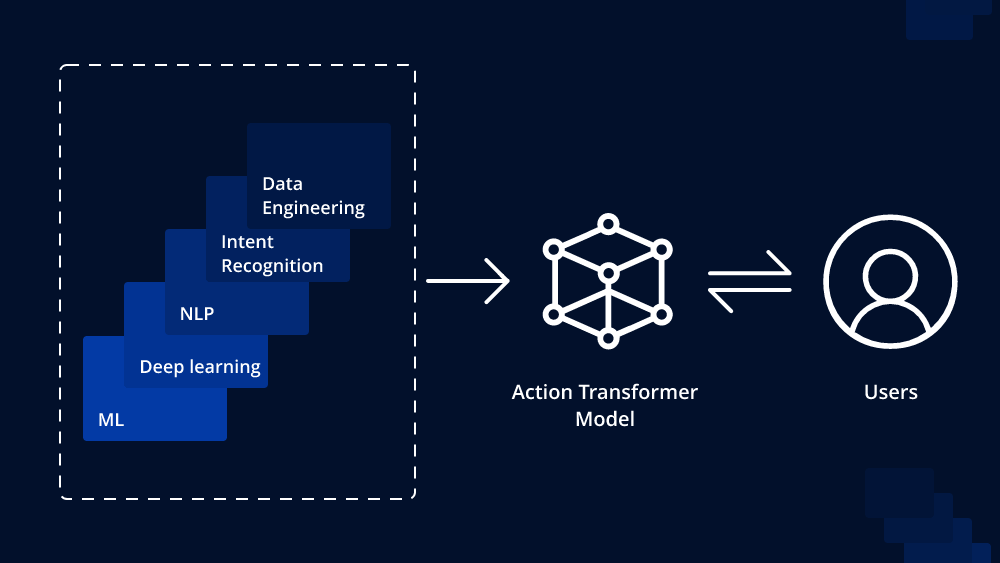

In recent years, transformer models have revolutionized natural language processing (NLP), achieving state-of-the-art results on a wide range of language tasks. The transformer architecture, first introduced in the landmark paper “Attention Is All You Need” by Vaswani et al. (2017), has become the foundation for numerous NLP models. One extension of this architecture is the Action Transformer Model, which aims to incorporate dynamic actions into the language understanding process. In this article, we explore what an Action Transformer Model is and how it works.

Understanding the Transformer Architecture:

Before delving into the specifics of the Action Transformer Model, it helps to have a basic understanding of the transformer architecture. At its core, the transformer uses a self-attention mechanism that processes all positions of an input sequence in parallel. This mechanism lets the model weigh the importance of every other word in the input when building the representation of each word. Because self-attention on its own ignores word order, and the transformer lacks the built-in sequential structure of recurrent neural networks (RNNs), it adds positional encodings to the input embeddings to account for word order.
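To make these two ingredients concrete, here is a minimal, dependency-free Python sketch of scaled dot-product self-attention and the sinusoidal positional encoding described by Vaswani et al. (2017). The helper names, the toy embeddings and the identity projection matrices are illustrative assumptions; a real transformer learns `w_q`, `w_k` and `w_v`, uses multiple attention heads, and runs on tensor libraries rather than nested lists.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # (n x k) @ (k x m) -> (n x m), using nested lists.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def positional_encoding(seq_len, d_model):
    # Sinusoidal encoding: even dimensions use sin, odd dimensions use cos,
    # with wavelengths that grow geometrically across dimension pairs.
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            angle = pos / (10000 ** ((j - j % 2) / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def self_attention(x, w_q, w_k, w_v):
    # Project every token into query, key and value vectors.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Score each position against every position; no recurrence over time.
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is an attention-weighted average of the value vectors.
    return matmul(weights, v), weights

# Toy input: three tokens with 4-dimensional embeddings (made-up values).
embeddings = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
pe = positional_encoding(len(embeddings), 4)
x = [[e + p for e, p in zip(er, pr)] for er, pr in zip(embeddings, pe)]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Adding the positional encoding before attention is what lets identical tokens at different positions receive different scores; without the `pe` term, permuting the input rows would simply permute the output rows.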

Introducing the Action Transformer Model:

The Action Transformer Model builds upon the transformer architecture by incorporating dynamic actions into the self-attention mechanism. In a standard transformer, the attention weights are computed solely from the content and positions of the input tokens, using projection matrices that stay fixed once training is complete. However, in real-world scenarios, understanding natural language often requires reasoning about dynamic actions that may influence the meaning of a sentence or document.

Dynamic actions refer to a set of learned operations or transformations that the model can apply to the input data adaptively. These actions are conditioned on the context of the input sequence and can change the representation of the sequence during processing. By incorporating dynamic actions, the Action Transformer Model gains the ability to perform more sophisticated reasoning tasks and better handle scenarios that involve changes or interactions over time.

How does an Action Transformer work?

- Action Encoding:

In an Action Transformer Model, each dynamic action is assigned a unique identifier, known as an action token. These action tokens are added to the input sequence to signal the presence of different actions. For instance, in a question-answering scenario, action tokens might indicate operations like “compare,” “add,” or “retrieve.”

- Action-Based Self-Attention:

During the self-attention process, the Action Transformer Model attends not only to the content of the input tokens but also to the action tokens. This enables the model to learn which action is most relevant for each part of the input sequence. The attention mechanism now considers the dynamic actions, allowing the model to focus on the most critical aspects of the input at different stages of processing.

- Adaptive Action Application:

Once the model has learned the importance of different actions, it can apply these actions adaptively to modify the representation of the input sequence. This process is often referred to as “action application” or “action execution.” The model can choose to emphasize certain aspects of the input or ignore irrelevant information based on the learned actions.

- Dynamic Computation Graph:

With dynamic actions playing a role in the self-attention mechanism, the Action Transformer Model effectively constructs a dynamic computation graph: which actions are applied at each layer, and how strongly, depends on their importance for the current input. This allows the model to perform flexible computations at each layer.
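The four steps above can be tied together in a small, untrained Python toy. This is a minimal sketch under stated assumptions, not the model's actual implementation: the action names follow the examples in the text, while the embedding size `D`, the random weights, the choice of one `D x D` matrix per action, the residual update and the `threshold` used to skip low-relevance actions are all hypothetical choices made for illustration. It only shows how action tokens, action-aware attention, weighted action application and input-dependent skipping could fit together.

```python
import math
import random

# Hypothetical action inventory; the names mirror the examples in the text.
ACTIONS = ["[COMPARE]", "[ADD]", "[RETRIEVE]"]
D = 4                     # toy embedding size (assumption)
rng = random.Random(0)    # fixed seed so the toy run is reproducible

def rand_matrix(rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(m, v):
    return [dot(row, v) for row in m]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

# Untrained stand-ins for learned parameters (hypothetical).
W_Q, W_K = rand_matrix(D, D), rand_matrix(D, D)
ACTION_WEIGHTS = {a: rand_matrix(D, D) for a in ACTIONS}  # one transform per action
EMBEDDINGS = {}

def embed(token):
    # Action encoding: action tokens get embeddings exactly like ordinary tokens.
    if token not in EMBEDDINGS:
        EMBEDDINGS[token] = [rng.uniform(-1.0, 1.0) for _ in range(D)]
    return EMBEDDINGS[token]

def action_relevance(tokens):
    # Action-based self-attention: prepend the action tokens, then let each
    # content token attend over the whole sequence (actions + content).
    xs = [embed(t) for t in ACTIONS + tokens]
    keys = [matvec(W_K, x) for x in xs]
    content = xs[len(ACTIONS):]
    relevance = []
    for x in content:
        q = matvec(W_Q, x)
        attn = softmax([dot(q, k) / math.sqrt(D) for k in keys])
        # Attention mass on the action tokens, renormalised to sum to 1.
        mass = attn[:len(ACTIONS)]
        total = sum(mass)
        relevance.append([w / total for w in mass])
    return content, relevance

def apply_actions(tokens, threshold=0.2):
    content, relevance = action_relevance(tokens)
    outputs, executed = [], []
    for x, rel in zip(content, relevance):
        # Dynamic computation graph: actions whose relevance falls below the
        # threshold are skipped, so the operations that run depend on the input.
        active = [(a, r) for a, r in zip(ACTIONS, rel) if r >= threshold]
        # Adaptive action application: add the relevance-weighted outputs of
        # the active actions back onto the token representation.
        update = [0.0] * D
        for a, r in active:
            y = matvec(ACTION_WEIGHTS[a], x)
            update = [u + r * yi for u, yi in zip(update, y)]
        outputs.append([xi + ui for xi, ui in zip(x, update)])
        executed.append([a for a, _ in active])
    return outputs, relevance, executed

question = ["is", "7", "larger", "than", "5", "?"]
outputs, relevance, executed = apply_actions(question)
for tok, rel, ran in zip(question, relevance, executed):
    print(f"{tok:>7}", [round(r, 2) for r in rel], ran)
```

Renormalising the attention mass on the action tokens turns it into a per-token distribution over actions, which is the kind of signal one would inspect when trying to interpret the model's choices. Because those relevances sum to 1, any `threshold` at or below `1 / len(ACTIONS)` guarantees that at least one action runs for every token.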

Advantages of the Action Transformer Model:

The incorporation of dynamic actions in the transformer architecture brings several advantages:

- Enhanced Reasoning: The model gains the ability to perform complex reasoning tasks by adapting its actions according to the input context.

- Temporal Understanding: Action Transformers can effectively process sequential data, and their actions let them represent how changes or interactions unfold, making them well-suited for tasks involving time-dependent interactions.

- Interpretability: Because the model’s attention is conditioned on explicit action tokens, inspecting which actions it attends to can make its reasoning process easier to understand.

Conclusion:

The Action Transformer Model extends the transformer architecture by incorporating dynamic actions, with the aim of improving reasoning and temporal understanding in language tasks. By introducing adaptability into the attention mechanism, this model represents a significant advancement in natural language processing. As research on transformer-based models continues to evolve, the Action Transformer Model holds promise for addressing even more complex language understanding challenges.

To learn more: https://www.leewayhertz.com/action-transformer-model/