In the realm of natural language processing (NLP) and machine learning, transformers have revolutionized the way we handle sequential data, such as text. With their ability to model long-range dependencies, transformers have become the go-to architecture for various NLP tasks. However, a new advancement called the Decision Transformer takes this technology a step further, enhancing it with decision-making capabilities. In this article, we will explore what the Decision Transformer is and how to use it within the context of a traditional transformer.

Understanding the Decision Transformer

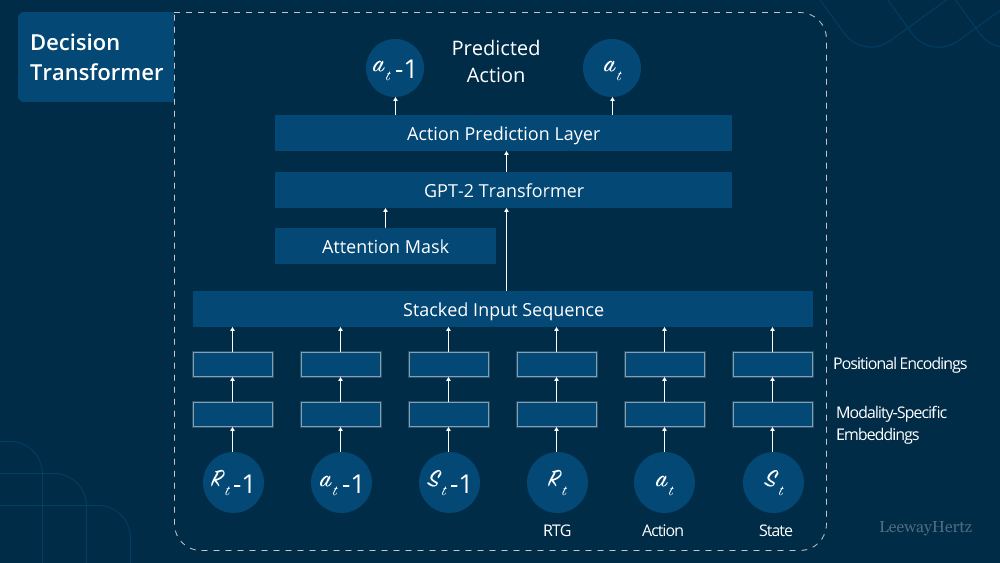

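The Decision Transformer, introduced by Chen et al. in the 2021 paper "Decision Transformer: Reinforcement Learning via Sequence Modeling," brings the standard transformer architecture to decision-making problems. Conventional reinforcement learning methods fit value functions or compute policy gradients. The Decision Transformer instead treats a sequence of decisions as a sequence-modeling problem: a causal, GPT-style transformer reads a trajectory of returns-to-go, states, and actions and predicts the next action, conditioned on the total return it is asked to achieve.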

In a natural language processing setting, the same idea is used to train a model to make a decision at each designated step of the input sequence. These decisions can be binary or multi-class, depending on the application. Because each decision becomes part of the context the model sees next, it can influence the model's later predictions, which can lead to more accurate and contextually appropriate results.
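In the original Decision Transformer, each timestep of a trajectory is represented by three tokens: the return-to-go (the sum of rewards from that step to the end of the episode), the state, and the action. The sketch below shows only that data layout in plain Python. The function names `returns_to_go` and `interleave` are hypothetical, and a real implementation would embed each token with a learned projection before passing the sequence to the transformer.

```python
from typing import List, Sequence, Tuple


def returns_to_go(rewards: Sequence[float]) -> List[float]:
    """Return-to-go at step t is the sum of rewards from t to the end of the episode."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each entry accumulates every future reward.
    for t in reversed(range(len(rewards))):
        running += rewards[t]
        rtg[t] = running
    return rtg


def interleave(states: Sequence[object], actions: Sequence[object],
               rewards: Sequence[float]) -> List[Tuple[str, object]]:
    """Lay out a trajectory as (R_1, s_1, a_1, R_2, s_2, a_2, ...)."""
    if not (len(states) == len(actions) == len(rewards)):
        raise ValueError("states, actions and rewards must have equal length")
    tokens: List[Tuple[str, object]] = []
    for r, s, a in zip(returns_to_go(rewards), states, actions):
        tokens.append(("return", r))
        tokens.append(("state", s))
        tokens.append(("action", a))
    return tokens


if __name__ == "__main__":
    # A toy three-step trajectory with hypothetical states and actions.
    states = ["s0", "s1", "s2"]
    actions = [1, 0, 1]
    rewards = [1.0, 0.0, 2.0]
    print(returns_to_go(rewards))
    for token in interleave(states, actions, rewards):
        print(token)
```

At inference time, the first return token is set to the return the model should aim for, and the observed reward is subtracted from it after each step. This is how the target return steers the actions the model predicts.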

Incorporating Decision Making

To turn a traditional transformer into a Decision Transformer-style model, you need to make a few key modifications to both training and inference:

- Dataset Preparation: First, prepare a dataset with decision labels. Annotate the data with the decision points where the model must make a choice, and give each decision point a label indicating the correct decision at that point.

- Decision Heads: Decision heads are additional output layers placed on top of the transformer. They take the hidden states as input and predict a distribution over the possible decisions given the current context.

- Decision Loss: During training, the model is optimized with a decision loss in addition to the usual language modeling loss. The decision loss (for example, cross-entropy against the decision labels) teaches the model to make accurate decisions at each decision point, while the language modeling loss keeps the generated text coherent and contextually relevant.

- Sampling Decisions: At inference time, you can choose how the model makes each decision. One option is to sample from the decision distribution the model predicts; another is to greedily select the most probable decision at each step.
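The four steps above can be sketched in plain Python for a single decision point. This is a minimal, framework-free illustration under stated assumptions, not the implementation from any particular paper or library. The decision head is assumed to be a single linear layer and the decision loss is assumed to be cross-entropy. The function names (`decision_head`, `decision_loss`, `combined_loss`, `greedy_decision`, `sampled_decision`) and the `weight` that balances the two losses are hypothetical. In a real model, the hidden state would come from the transformer and the weights would be learned by gradient descent.

```python
import math
import random
from typing import List, Sequence


def decision_head(hidden: Sequence[float], weights: Sequence[Sequence[float]],
                  bias: Sequence[float]) -> List[float]:
    """Assumed linear decision head: one logit per possible decision."""
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(weights, bias)]


def log_softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]


def softmax(logits: Sequence[float]) -> List[float]:
    return [math.exp(x) for x in log_softmax(logits)]


def decision_loss(logits: Sequence[float], label: int) -> float:
    """Cross-entropy between the predicted decision distribution and the gold label."""
    return -log_softmax(logits)[label]


def combined_loss(lm_loss: float, dec_loss: float, weight: float = 1.0) -> float:
    # Hypothetical weighting: total = language modeling loss + weight * decision loss.
    return lm_loss + weight * dec_loss


def greedy_decision(logits: Sequence[float]) -> int:
    """Pick the most probable decision (deterministic)."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sampled_decision(logits: Sequence[float], rng: random.Random) -> int:
    """Sample a decision in proportion to its predicted probability."""
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    # Toy hidden state and hypothetical head parameters for three possible decisions.
    hidden = [0.5, -1.0, 2.0]
    weights = [[0.1, 0.2, 0.3], [-0.2, 0.4, 0.1], [0.0, -0.3, 0.5]]
    bias = [0.0, 0.1, -0.1]
    logits = decision_head(hidden, weights, bias)
    gold_label = 2  # from the annotated dataset
    loss = combined_loss(lm_loss=2.3, dec_loss=decision_loss(logits, gold_label), weight=0.5)
    print("probabilities:", softmax(logits))
    print("training loss:", loss)
    print("greedy:", greedy_decision(logits))
    print("sampled:", sampled_decision(logits, random.Random(0)))
```

Greedy selection is deterministic and reproducible. Sampling gives up some of that predictability in exchange for more varied outputs, which can matter in open-ended generation.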

Benefits of the Decision Transformer

The Decision Transformer approach can offer several advantages over a traditional transformer:

- Interpretability: Because the model's decisions are explicit outputs, they can be inspected to help explain the reasoning behind its predictions. This is useful in critical applications where the decision-making process must be transparent and justifiable.

- Contextual Adaptation: By making explicit decisions, the model can adapt its predictions to the specific context, which can lead to more accurate and relevant outputs. This matters most in scenarios where a single decision significantly affects subsequent predictions.

- Few-Shot Learning: The decision-making mechanism may also help in few-shot scenarios, where training data is limited, by letting the model draw on its existing knowledge when making decisions.

Applications of Decision Transformers

The decision-making idea behind the Decision Transformer can be applied to a range of NLP tasks, including but not limited to:

- Conversational AI: In chatbots and conversational agents, Decision Transformers can be used to make decisions on how to respond to user inputs, resulting in more interactive and context-aware conversations.

- Machine Translation: Decision Transformers can decide on the best translation for ambiguous phrases, enhancing the quality of machine translation systems.

- Question Answering: By deciding which pieces of information are most relevant, Decision Transformers can improve the accuracy of question-answering systems.

- Summarization: Decision Transformers can decide which sentences or content are most important to include in a summary, which can yield more concise and informative summaries.

Conclusion

The Decision Transformer extends the traditional transformer architecture with decision-making capabilities. By preparing the dataset with decision labels and adding decision heads and a decision loss, you can bring Decision Transformer ideas into your NLP projects to obtain more accurate and contextually relevant results. As research in this area continues, Decision Transformers may play an increasingly important role in NLP applications across many industries.

To learn more: https://www.leewayhertz.com/decision-transformer/