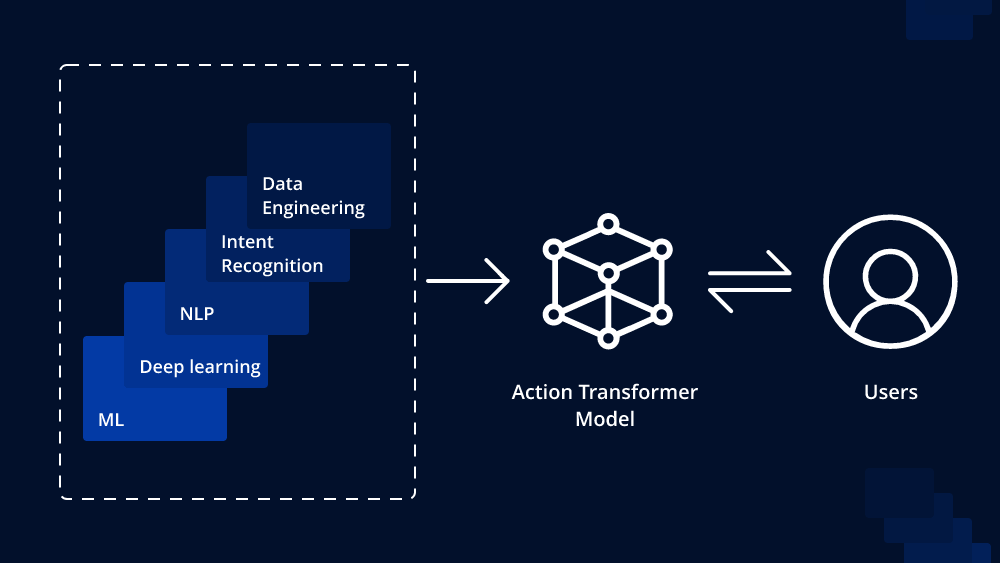

Artificial intelligence (AI) has advanced significantly in natural language processing (NLP), computer vision, and many other domains. One of the most important developments in NLP is the transformer model, which has revolutionized tasks such as machine translation, text generation, and sentiment analysis. More recently, researchers have extended transformers to tasks that involve taking actions, giving rise to action transformer models. In this article, we explore what an action transformer model is and how to implement one.

Understanding Transformer Models

Before diving into action transformer models, let’s briefly review how transformer models work. Transformers are deep learning architectures that excel at capturing long-range dependencies in sequential data, which makes them well suited to natural language processing. The architecture relies on self-attention, which lets each position in the input sequence weigh every other position when computing its representation. Because attention connects any two positions directly and processes the whole sequence in parallel, transformers outperform traditional recurrent neural networks (RNNs) on many sequential tasks.
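To make self-attention concrete, here is a minimal pure-Python sketch of scaled dot-product attention, softmax(QK^T / sqrt(d)) V, the operation at the core of the transformer. It is illustrative only: the function names are our own, and it omits the learned query/key/value projections, multiple heads, and the feed-forward sublayer that a full transformer layer contains.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors (one vector per position).

    Returns the output vectors and the attention-weight matrix (one row per query).
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this query against every key; dividing by sqrt(d) keeps scores in a stable range.
        w = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        weights.append(w)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(wi * v[j] for wi, v in zip(w, values))
                        for j in range(len(values[0]))])
    return outputs, weights

# Three positions with 2-dimensional vectors. A real transformer derives Q, K and V
# from x with learned linear projections; here Q = K = V = x for simplicity.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(x, x, x)
for row in attn:
    print([round(p, 3) for p in row])
```

Each row of `attn` sums to 1 and shows how strongly one position attends to every other position, regardless of how far apart they are in the sequence.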

What is an Action Transformer Model?

An action transformer model extends the traditional transformer with the notion of actions, or operations on items in a sequence. This makes it particularly useful for tasks that involve step-by-step decision-making or interactions between elements of a sequence. Applications include dialogue systems, procedural text understanding, and algorithmic decision-making.

Key Components of an Action Transformer Model

- Sequence Encoding: Like the standard transformer, an action transformer starts by encoding the input sequence. This step maps each element (token) of the input to a dense embedding vector, usually combined with a positional encoding so the model knows where each element sits in the sequence.

- Action Representation: In an action transformer, actions are represented as special tokens added to the input sequence. These action tokens act as indicators to the model that a particular step or operation is being performed.

- Action Embedding: Action tokens are embedded into the model’s input using action embeddings. These embeddings are learned during the training process and provide the model with information about the actions being performed.

- Action Attention: The attention mechanism in an action transformer considers both the input sequence and the action embeddings. This enables the model to selectively attend to relevant elements of the input sequence based on the current action being performed.

- Action Decoding: After processing the sequence with the action embeddings, the action transformer generates outputs or predictions based on the interactions between the elements and the actions performed.
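The components above can be illustrated with a toy, untrained model in pure Python. This is a hypothetical sketch under simplifying assumptions, not an actual action transformer: the class name, the `<act:...>` token format, the 8-dimensional embeddings, and the use of a separate "type" embedding to mark action positions (similar in spirit to BERT's segment embeddings) are all our own choices, and the single attention step has no learned projections. Decoding simply maps each state back to the best-matching vocabulary token.

```python
import math
import random

D = 8  # toy embedding size

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def positional_encoding(pos, d=D):
    # Sinusoidal encoding: sin on even dimensions, cos on odd ones.
    return [math.sin(pos / 10000 ** (i / d)) if i % 2 == 0
            else math.cos(pos / 10000 ** ((i - 1) / d))
            for i in range(d)]

class ToyActionTransformer:
    """Hypothetical single-layer model illustrating the components above (untrained)."""

    def __init__(self, vocab, action_tokens, seed=0):
        rng = random.Random(seed)
        self.vocab = list(vocab) + list(action_tokens)
        self.actions = set(action_tokens)
        # Token embeddings: random here, learned during training in a real model.
        self.embed = {t: [rng.gauss(0, 1) for _ in range(D)] for t in self.vocab}
        # Action embedding: one extra vector added to action positions, another to input positions.
        self.type_embed = {is_action: [rng.gauss(0, 1) for _ in range(D)]
                           for is_action in (False, True)}

    def encode(self, tokens):
        # Sequence encoding + action embedding: token vector + type vector + position vector.
        return [add(add(self.embed[t], self.type_embed[t in self.actions]),
                    positional_encoding(i))
                for i, t in enumerate(tokens)]

    def attend(self, h):
        # Action attention: each position, input or action, attends over the whole sequence.
        out = []
        for q in h:
            w = softmax([dot(q, k) / math.sqrt(D) for k in h])
            out.append([sum(wi * v[j] for wi, v in zip(w, h)) for j in range(D)])
        return out

    def decode(self, h):
        # Toy decoding: pick the vocabulary token whose embedding best matches each state.
        return [max(self.vocab, key=lambda t: dot(vec, self.embed[t])) for vec in h]

tokens = ["open", "file", "<act:read>", "<act:summarize>"]
toy = ToyActionTransformer(vocab=["open", "file", "report"],
                           action_tokens=["<act:read>", "<act:summarize>"])
hidden = toy.attend(toy.encode(tokens))
print(toy.decode(hidden))
```

Because the weights are random, the decoded tokens carry no meaning; in a real model every table here would be learned from data, and many attention layers would be stacked.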

Implementing an Action Transformer

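Now, let’s explore how to implement an action transformer in practice. The steps below load a pretrained checkpoint and run inference with it, using Python, the deep learning library PyTorch, and the Hugging Face Transformers library.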

Step 1: Install Required Libraries

```bash
pip install torch
pip install transformers
```

Step 2: Import Libraries

```python
import torch  # PyTorch backend used by the Transformers models below
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
```

Step 3: Load Action Transformer Model

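```python
# Placeholder: replace with the Hub ID or local path of an encoder-decoder (seq2seq) checkpoint
model_name = "your-pretrained-action-transformer-model"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
```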

Step 4: Prepare Input Sequence and Actions

```python
sequence = "Your input sequence here"
actions = ["action_1", "action_2", "action_3"]
```

Step 5: Encode Input Sequence and Actions

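```python
tokenizer.add_special_tokens({"additional_special_tokens": actions})  # keep each action as one token
model.resize_token_embeddings(len(tokenizer))  # newly added action embeddings are untrained until fine-tuned
input_text = " ".join([sequence] + actions)
inputs = tokenizer(input_text, return_tensors="pt")
```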

Step 6: Generate Outputs

```python
outputs = model.generate(**inputs, max_new_tokens=64)  # cap the length of the generated output
generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(generated_text)
```
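Steps 5 and 6 pass all actions to the model in a single input. For step-by-step decision-making, one alternative is to apply the actions one at a time and feed each result into the next step. The sketch below is our own illustration of that idea, not part of the original method: `run_actions_stepwise` is a hypothetical helper, and `generate_fn` stands for any function that maps input text to generated text (for example, a wrapper around the tokenizer and `model.generate` from the steps above, shown commented out). A trivial stand-in, `str.upper`, is used so the example runs without a model.

```python
from typing import Callable, List

def run_actions_stepwise(sequence: str, actions: List[str],
                         generate_fn: Callable[[str], str]) -> List[str]:
    """Apply actions one at a time, feeding each step's output into the next step."""
    state = sequence
    history = []
    for action in actions:
        # Condition the generator on the current state plus the single action for this step.
        output = generate_fn(f"{state} {action}")
        history.append(output)
        state = output  # the result becomes the input for the next action
    return history

# With the tokenizer and model loaded earlier, generate_fn could be:
# def generate_fn(text):
#     ids = model.generate(**tokenizer(text, return_tensors="pt"), max_new_tokens=64)
#     return tokenizer.decode(ids[0], skip_special_tokens=True)

steps = run_actions_stepwise("Your input sequence here",
                             ["action_1", "action_2", "action_3"],
                             generate_fn=str.upper)  # stand-in "model" for demonstration
for step in steps:
    print(step)
```

The single-input approach needs only one generation call, while the stepwise loop needs one call per action but lets each decision depend on the outcome of the previous one.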

Conclusion

Action transformer models extend the traditional transformer architecture by integrating actions into sequence processing. This added capability opens up new possibilities for complex natural language processing tasks that involve step-by-step decision-making or interactions between elements. Using the implementation steps above as a starting point, researchers and developers can experiment with action transformers across a range of AI and NLP applications. As transformer-based models continue to advance, we can expect even more innovative approaches to complex tasks.

To learn more: https://www.leewayhertz.com/action-transformer-model/