Building a GPT (Generative Pre-trained Transformer) model is one of the most demanding undertakings in artificial intelligence and natural language processing. The process combines a transformer architecture, very large text datasets, and substantial computing power to produce a model that can interpret and generate human-like text.

The Data Journey: From Collection to Preprocessing

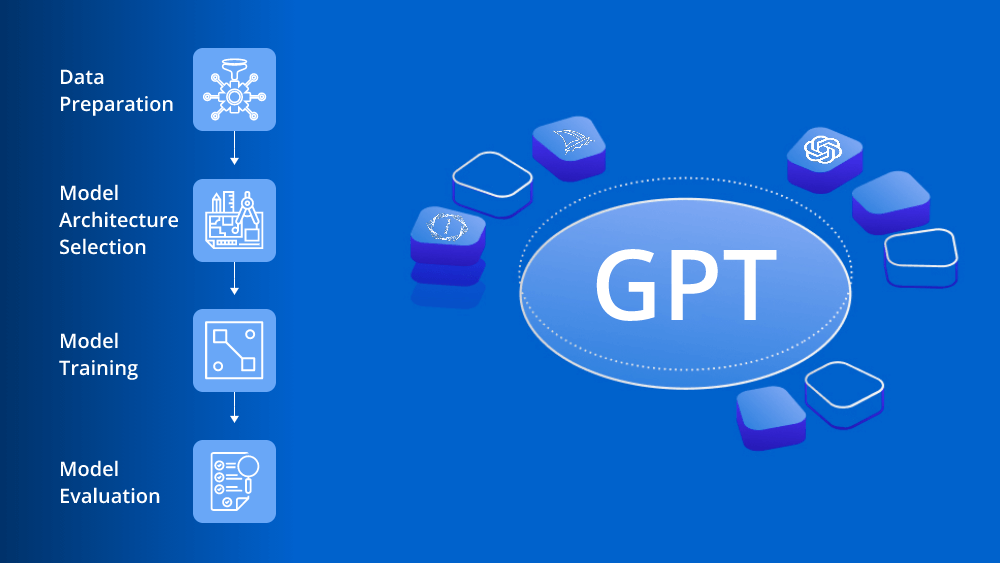

At the heart of a GPT model lies a large corpus of text drawn from books, articles, websites, and other sources. Before training begins, this data is carefully preprocessed: it is cleaned (for example, by stripping leftover markup), tokenized into units the model can index, and formatted into sequences ready for training.
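To make the clean, tokenize, and format steps concrete, here is a minimal sketch in Python. It is an illustration under simplifying assumptions, not how any particular GPT pipeline works: production models typically use subword tokenizers such as byte-pair encoding, whereas this sketch splits on words and punctuation. The helper names (`clean`, `tokenize`, `build_vocab`, `encode`) and the `<unk>` placeholder are hypothetical choices made for this example.

```python
import re

def clean(text):
    # Replace HTML-like tags with a space, then collapse runs of whitespace.
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()

def tokenize(text):
    # Simplified word-level tokenizer (an assumption for illustration):
    # lowercase words, with each punctuation mark as its own token.
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens):
    # Assign ids in order of first appearance; id 0 is reserved for unknown tokens.
    vocab = {"<unk>": 0}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

def encode(tokens, vocab):
    # Map each token to its integer id, falling back to the unknown id.
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokens]

raw = "<p>The cat sat.</p>\n\n  The   cat ran!"
tokens = tokenize(clean(raw))
vocab = build_vocab(tokens)
ids = encode(tokens, vocab)
print(tokens)  # ['the', 'cat', 'sat', '.', 'the', 'cat', 'ran', '!']
print(ids)     # [1, 2, 3, 4, 1, 2, 5, 6]
```

The integer ids, not the raw strings, are what a model actually consumes. A word that never appeared in the corpus maps to id 0 here, which is one reason real systems prefer subword tokenizers that can break unfamiliar words into known pieces.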

Architecting Intelligence: Training the GPT Model

The architecture of a GPT model defines its stack of transformer layers, its attention mechanisms, and its parameter count. Training such a model demands an enormous amount of computational muscle, typically supplied by clusters of GPUs or TPUs. By iteratively adjusting its parameters, which can number from millions to many billions depending on the model, the model learns the nuances of language through a single objective: predicting the next token (roughly, the next word or word piece) in a sequence.
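The idea of iteratively adjusting parameters to predict the next token can be shown at toy scale. The sketch below is deliberately not a transformer: it assumes a bigram model, in which the prediction depends only on the current token, so that the whole training loop fits in a few lines of plain Python. The function name `train_bigram`, the learning rate, and the tiny token sequence are all made up for illustration. What it shares with GPT training is the objective (cross-entropy on the next token) and the update rule (gradient descent).

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(token_ids, vocab_size, epochs=50, lr=0.5):
    # Parameters: logits[i][j] scores token j as the successor of token i.
    logits = [[0.0] * vocab_size for _ in range(vocab_size)]
    pairs = list(zip(token_ids[:-1], token_ids[1:]))
    history = []  # average loss per epoch
    for _ in range(epochs):
        total_loss = 0.0
        for cur, nxt in pairs:
            probs = softmax(logits[cur])
            total_loss += -math.log(probs[nxt])  # cross-entropy for this pair
            # Gradient of cross-entropy w.r.t. the logits is probs - one_hot(nxt).
            for j in range(vocab_size):
                grad = probs[j] - (1.0 if j == nxt else 0.0)
                logits[cur][j] -= lr * grad
        history.append(total_loss / len(pairs))
    return logits, history

# Hypothetical token ids, e.g. produced by a tokenizer.
token_ids = [0, 1, 2, 0, 1, 2, 0, 1, 3]
logits, history = train_bigram(token_ids, vocab_size=4)
print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")
probs_after_0 = softmax(logits[0])
print("most likely token after 0:", probs_after_0.index(max(probs_after_0)))
```

In this sequence token 0 is always followed by token 1, so the model learns to put most of its probability there, and the average loss falls over the epochs. A real GPT replaces the lookup table with a deep transformer that conditions on the whole preceding context, but the training signal is the same.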

Refinement and Customization: Fine-Tuning and Optimization

Once the foundation is laid, fine-tuning follows. This stage continues training the pre-trained model on domain-specific or task-specific data, tailoring its capabilities to specialized use cases. Ongoing optimization then refines the model’s performance and efficiency.

The Crucible of Evaluation: Assessing GPT’s Prowess

The model’s mettle is then tested through rigorous evaluation. Metrics like perplexity measure how well it predicts text (lower is better), while qualitative assessments judge the quality of the text it generates. Evaluating on unseen data, held out from training, checks that the model generalizes rather than merely memorizing what it has seen.
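Perplexity has a compact definition: it is the exponential of the average negative log-probability the model assigned to each token that actually came next. The sketch below computes it from a list of such probabilities. The function name and the sample numbers are invented for illustration and do not come from any real model.

```python
import math

def perplexity(token_probs):
    # token_probs: probability the model gave to each token that actually occurred.
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# A model guessing uniformly among 4 tokens has perplexity of about 4.
uniform = perplexity([0.25, 0.25, 0.25, 0.25])
# A model that assigns high probability to the right tokens scores lower.
confident = perplexity([0.9, 0.8, 0.95, 0.85])
print(uniform, confident)
```

One way to read the number: a perplexity of 4 means the model is, on average, as uncertain as if it were choosing uniformly among four equally likely tokens.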

Deploying Power: From Development to Real-World Use

A model that passes evaluation is ready for deployment in diverse applications. However, this is merely the beginning: continuous monitoring, updates, and enhancements are crucial to keep it effective as language patterns and user needs evolve.

Navigating the Challenges: Pioneering the Future of GPT Models

Yet, the journey isn’t without obstacles. Enormous computational demands pose accessibility barriers, while ensuring data quality and mitigating biases remain ongoing challenges. Ethical questions about responsible use and potential misuse also call for close scrutiny of these systems.

In Conclusion: GPT Models as Harbingers of AI Evolution

Building a GPT model brings together large-scale engineering and careful data work. Despite its complexities, it stands as a testament to the progress made in AI and natural language processing. As these models continue to evolve, they promise new applications across industries and may reshape how people interact with AI.